AU10TIX, a global identity verification and management company, reported that the increase in law enforcement intervention, among other factors, has pushed ID fraudsters toward crypto markets.

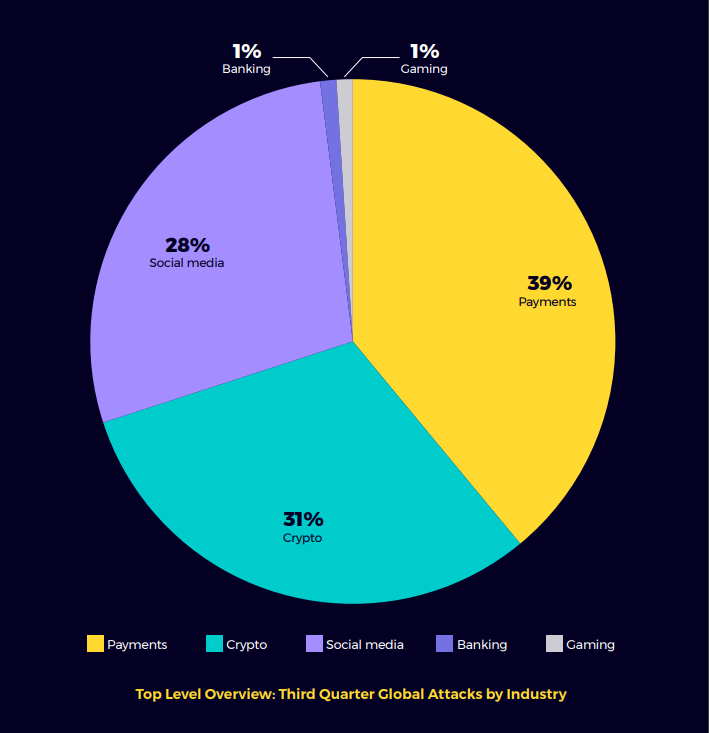

In the third quarter of this year, the payments industry continued to be the most targeted sector, according to the company's Q3 2024 Global Identity Fraud Report.

However, bad actors have now turned to the crypto market, which accounted for 31% of all Q3 attacks.

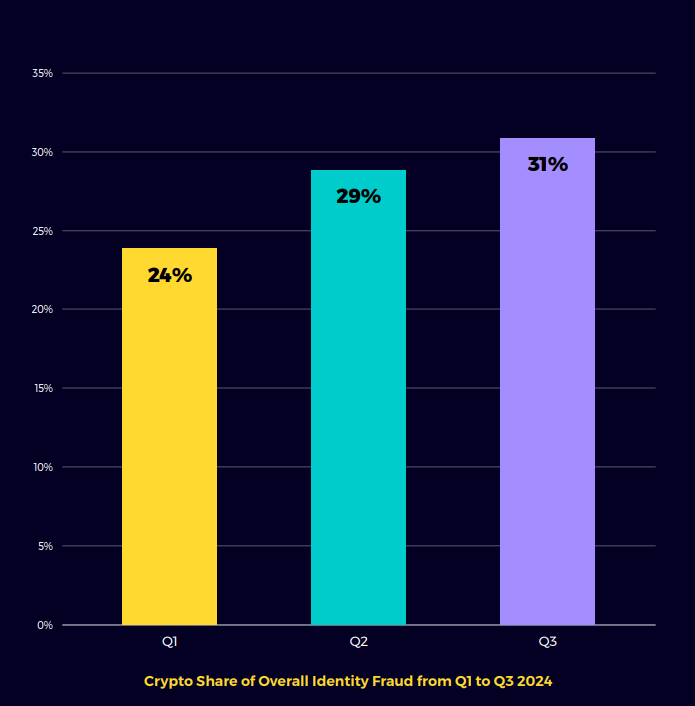

The report found that this year alone, the crypto share of overall identity fraud increased from 24% in the first quarter to 31% in the third.

Per the researchers, the payments sector has become “a tougher target.” Several factors have contributed to fraudsters becoming “discouraged” and forced to shift their attention to less regulated sectors like the crypto market.

These factors include:

- self-regulation;

- increased INTERPOL and law enforcement interventions;

- higher Bitcoin price, which attracts more fraud and money laundering.

Notably, there was a temporary dip in fraud after the Markets in Crypto-Assets Regulation (MiCA) finally came into force earlier this year.

However, the attacks rebounded, and “criminals quickly adapted,” the researchers said.

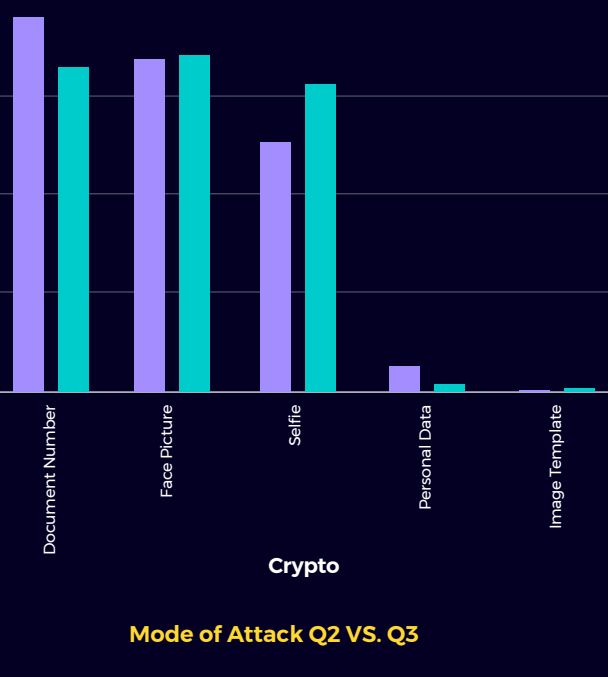

Also, there was a notable rise in the selfie mode of attack within the crypto sector between Q2 and Q3, as seen in the image below.

Overall, the report found that rapid AI developments have enabled “the industrialization of identity fraud.”

Bad actors are now launching “automated mega-attacks of thousands of false identities targeting payments, crypto, and social media companies all over the world.”

Fraudsters create fake profiles using real and fabricated data. Then, bots automate the fraud and spread fake accounts.

Fake profiles also open fraudulent accounts in banking, crypto, and payments, which are often linked to money laundering.

Surge in 100% Deepfaked ‘Selfies’

The report warned about a “faster-than-anticipated leap in the sophistication of AI-powered impersonation bots and deepfake technology.”

Particularly, there has been a rise in synthetic selfies and fake documents that have managed to bypass “some leading” verification systems.

While selfies used to be one of the least-used fraud methods, novel and “extremely sophisticated technology” has enabled fraudsters to create 100% deepfaked “selfies.”

These images match the synthetic IDs that they use to trick automated KYC processes, said the researchers.

Bad actors utilize face swaps, as well as face synthesis, with AI generating a new face and adapting facial features to deceive both the viewers and the systems.

Fraudsters already have experience using face morphing to combine multiple faces into a new one, and they are now using deepfake technology to create real-time videos.

“Fraudsters are evolving faster than ever, leveraging AI to scale and execute their attacks, especially in the social media and payments sectors,” said Dan Yerushalmi, CEO of AU10TIX.

“While companies are using AI to bolster security, criminals are weaponizing the same technology to create synthetic selfies and fake documents, making detection almost impossible.”

The report warns that as fraudsters get smarter, companies must do the same and embrace new detection methods to protect themselves and their customers.

Besides the increase in synthetic selfies, AU10TIX found a 20% rise in the use of “image template” attacks.

This suggests that bad actors are using AI to rapidly generate variations of synthetic identities from a single ID template, changing the photos, document numbers, and other personal information.

“The regional hotspots driving this change are APAC and North America,” said the report. “The surges have not been observed in other regions.”